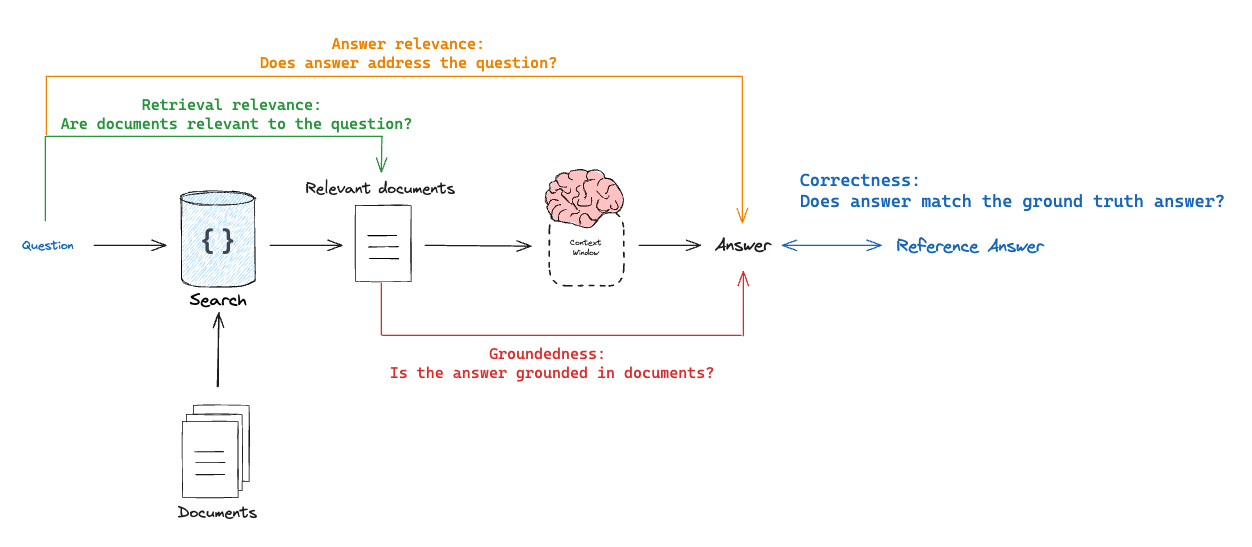

from langchain_community.document_loaders import WebBaseLoader
from langchain_core.vectorstores import InMemoryVectorStore
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter
from langsmith import Client, traceable
from typing_extensions import Annotated, TypedDict

# List of URLs to load documents from
urls = [
    "https://lilianweng.github.io/posts/2023-06-23-agent/",
    "https://lilianweng.github.io/posts/2023-03-15-prompt-engineering/",
    "https://lilianweng.github.io/posts/2023-10-25-adv-attack-llm/",
]

# Load documents from the URLs and flatten the per-URL lists into one list
docs = [WebBaseLoader(url).load() for url in urls]
docs_list = [item for sublist in docs for item in sublist]

# Initialize a text splitter with the specified chunk size and overlap
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=250, chunk_overlap=0
)

# Split the documents into chunks
doc_splits = text_splitter.split_documents(docs_list)

# Add the document chunks to the "vector store" using OpenAIEmbeddings
vectorstore = InMemoryVectorStore.from_documents(
    documents=doc_splits,
    embedding=OpenAIEmbeddings(),
)

# With langchain we can easily turn any vector store into a retrieval component.
# The number of results is passed through search_kwargs; a bare k=6 keyword is not applied.
retriever = vectorstore.as_retriever(search_kwargs={"k": 6})

llm = ChatOpenAI(model="gpt-4o", temperature=1)


# Add decorator so this function is traced in LangSmith
@traceable()
def rag_bot(question: str) -> dict:
    # langchain Retriever will be automatically traced
    docs = retriever.invoke(question)
    docs_string = "".join(doc.page_content for doc in docs)

    instructions = f"""You are a helpful assistant who is good at analyzing source information and answering questions.
Use the following source documents to answer the user's questions.
If you don't know the answer, just say that you don't know.
Use three sentences maximum and keep the answer concise.

Documents:
{docs_string}"""

    # langchain ChatModel will be automatically traced
    ai_msg = llm.invoke(
        [
            {"role": "system", "content": instructions},
            {"role": "user", "content": question},
        ],
    )
    return {"answer": ai_msg.content, "documents": docs}


client = Client()

# Define the examples for the dataset
examples = [
    {
        "inputs": {"question": "How does the ReAct agent use self-reflection?"},
        "outputs": {"answer": "ReAct integrates reasoning and acting, performing actions (such as calling tools like the Wikipedia search API) and then observing / reasoning about the tool outputs."},
    },
    {
        "inputs": {"question": "What are the types of biases that can arise with few-shot prompting?"},
        "outputs": {"answer": "The biases that can arise with few-shot prompting include (1) Majority label bias, (2) Recency bias, and (3) Common token bias."},
    },
    {
        "inputs": {"question": "What are five types of adversarial attacks?"},
        "outputs": {"answer": "Five types of adversarial attacks are (1) Token manipulation, (2) Gradient based attack, (3) Jailbreak prompting, (4) Human red-teaming, (5) Model red-teaming."},
    },
]

# Create the dataset and examples in LangSmith
dataset_name = "Lilian Weng Blogs Q&A"
if not client.has_dataset(dataset_name=dataset_name):
    dataset = client.create_dataset(dataset_name=dataset_name)
    client.create_examples(
        dataset_id=dataset.id,
        examples=examples
    )


# Grade output schema
class CorrectnessGrade(TypedDict):
    # Note that the order in which the fields are defined is the order in which the model will generate them.
    # It is useful to put explanations before responses because it forces the model to think through
    # its final response before generating it:
    explanation: Annotated[str, ..., "Explain your reasoning for the score"]
    correct: Annotated[bool, ..., "True if the answer is correct, False otherwise."]


# Grade prompt
correctness_instructions = """You are a teacher grading a quiz.

You will be given a QUESTION, the GROUND TRUTH (correct) ANSWER, and the STUDENT ANSWER.

Here are the grading criteria to follow:
(1) Grade the student answers based ONLY on their factual accuracy relative to the ground truth answer.
(2) Ensure that the student answer does not contain any conflicting statements.
(3) It is OK if the student answer contains more information than the ground truth answer, as long as it is factually accurate relative to the ground truth answer.

Correctness:
A correctness value of True means that the student's answer meets all of the criteria.
A correctness value of False means that the student's answer does not meet all of the criteria.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""

# Grader LLM
grader_llm = ChatOpenAI(model="gpt-4o", temperature=0).with_structured_output(
    CorrectnessGrade, method="json_schema", strict=True
)


def correctness(inputs: dict, outputs: dict, reference_outputs: dict) -> bool:
    """An evaluator for RAG answer accuracy"""
    answers = f"""\
QUESTION: {inputs['question']}
GROUND TRUTH ANSWER: {reference_outputs['answer']}
STUDENT ANSWER: {outputs['answer']}"""

    # Run evaluator
    grade = grader_llm.invoke(
        [
            {"role": "system", "content": correctness_instructions},
            {"role": "user", "content": answers},
        ]
    )
    return grade["correct"]


# Grade output schema
class RelevanceGrade(TypedDict):
    explanation: Annotated[str, ..., "Explain your reasoning for the score"]
    relevant: Annotated[
        bool, ..., "True if the answer addresses the question, False otherwise"
    ]


# Grade prompt
relevance_instructions = """You are a teacher grading a quiz.

You will be given a QUESTION and a STUDENT ANSWER.

Here are the grading criteria to follow:
(1) Ensure the STUDENT ANSWER is concise and relevant to the QUESTION
(2) Ensure the STUDENT ANSWER helps to answer the QUESTION

Relevance:
A relevance value of True means that the student's answer meets all of the criteria.
A relevance value of False means that the student's answer does not meet all of the criteria.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""

# Grader LLM
relevance_llm = ChatOpenAI(model="gpt-4o", temperature=0).with_structured_output(
    RelevanceGrade, method="json_schema", strict=True
)


# Evaluator
def relevance(inputs: dict, outputs: dict) -> bool:
    """A simple evaluator for RAG answer helpfulness."""
    answer = f"QUESTION: {inputs['question']}\nSTUDENT ANSWER: {outputs['answer']}"
    grade = relevance_llm.invoke(
        [
            {"role": "system", "content": relevance_instructions},
            {"role": "user", "content": answer},
        ]
    )
    return grade["relevant"]


# Grade output schema
class GroundedGrade(TypedDict):
    explanation: Annotated[str, ..., "Explain your reasoning for the score"]
    grounded: Annotated[
        bool, ..., "True if the answer is grounded in the documents, False if it contains hallucinated information"
    ]


# Grade prompt
grounded_instructions = """You are a teacher grading a quiz.

You will be given FACTS and a STUDENT ANSWER.

Here are the grading criteria to follow:
(1) Ensure the STUDENT ANSWER is grounded in the FACTS.
(2) Ensure the STUDENT ANSWER does not contain "hallucinated" information outside the scope of the FACTS.

Grounded:
A grounded value of True means that the student's answer meets all of the criteria.
A grounded value of False means that the student's answer does not meet all of the criteria.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""

# Grader LLM
grounded_llm = ChatOpenAI(model="gpt-4o", temperature=0).with_structured_output(
    GroundedGrade, method="json_schema", strict=True
)


# Evaluator
def groundedness(inputs: dict, outputs: dict) -> bool:
    """A simple evaluator for RAG answer groundedness."""
    doc_string = "\n\n".join(doc.page_content for doc in outputs["documents"])
    answer = f"FACTS: {doc_string}\nSTUDENT ANSWER: {outputs['answer']}"
    grade = grounded_llm.invoke(
        [
            {"role": "system", "content": grounded_instructions},
            {"role": "user", "content": answer},
        ]
    )
    return grade["grounded"]


# Grade output schema
class RetrievalRelevanceGrade(TypedDict):
    explanation: Annotated[str, ..., "Explain your reasoning for the score"]
    relevant: Annotated[
        bool,
        ...,
        "True if the retrieved documents are relevant to the question, False otherwise",
    ]


# Grade prompt
retrieval_relevance_instructions = """You are a teacher grading a quiz.

You will be given a QUESTION and a set of FACTS provided by the student.

Here are the grading criteria to follow:
(1) Your goal is to identify FACTS that are completely unrelated to the QUESTION
(2) If the facts contain ANY keywords or semantic meaning related to the question, consider them relevant
(3) It is OK if the facts have SOME information that is unrelated to the question as long as (2) is met

Relevance:
A relevance value of True means that the FACTS contain ANY keywords or semantic meaning related to the QUESTION and are therefore relevant.
A relevance value of False means that the FACTS are completely unrelated to the QUESTION.

Explain your reasoning in a step-by-step manner to ensure your reasoning and conclusion are correct.

Avoid simply stating the correct answer at the outset."""

# Grader LLM
retrieval_relevance_llm = ChatOpenAI(
    model="gpt-4o", temperature=0
).with_structured_output(RetrievalRelevanceGrade, method="json_schema", strict=True)


def retrieval_relevance(inputs: dict, outputs: dict) -> bool:
    """An evaluator for document relevance"""
    doc_string = "\n\n".join(doc.page_content for doc in outputs["documents"])
    answer = f"FACTS: {doc_string}\nQUESTION: {inputs['question']}"

    # Run evaluator
    grade = retrieval_relevance_llm.invoke(
        [
            {"role": "system", "content": retrieval_relevance_instructions},
            {"role": "user", "content": answer},
        ]
    )
    return grade["relevant"]


# Adapt the dataset's input format to the rag_bot signature
def target(inputs: dict) -> dict:
    return rag_bot(inputs["question"])


experiment_results = client.evaluate(
    target,
    data=dataset_name,
    evaluators=[correctness, groundedness, relevance, retrieval_relevance],
    experiment_prefix="rag-doc-relevance",
    metadata={"version": "LCEL context, gpt-4-0125-preview"},
)

# Explore results locally as a dataframe if you have pandas installed
# experiment_results.to_pandas()
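
Each of the four evaluators above returns a boolean per example, so a natural first summary is a per-evaluator pass rate. The snippet below is a minimal offline sketch of that aggregation. It makes no LangSmith or OpenAI calls; `pass_rates` and `sample_rows` are hypothetical names, and the scores are made-up placeholders, not output from the experiment.

```python
from typing import Dict, List


def pass_rates(rows: List[Dict[str, bool]]) -> Dict[str, float]:
    """Return the fraction of True scores for each evaluator name."""
    totals: Dict[str, int] = {}
    passes: Dict[str, int] = {}
    for row in rows:
        for name, passed in row.items():
            totals[name] = totals.get(name, 0) + 1
            passes[name] = passes.get(name, 0) + int(passed)
    return {name: passes[name] / totals[name] for name in totals}


# Made-up scores for three examples (assumption: one dict per example,
# keyed by evaluator function name). Not real experiment results.
sample_rows = [
    {"correctness": True, "groundedness": True, "relevance": True, "retrieval_relevance": True},
    {"correctness": False, "groundedness": True, "relevance": True, "retrieval_relevance": True},
    {"correctness": True, "groundedness": False, "relevance": True, "retrieval_relevance": True},
]

rates = pass_rates(sample_rows)
for name, rate in sorted(rates.items()):
    print(f"{name}: {rate:.2f}")
```

Reading the four rates side by side helps separate retrieval problems (low `retrieval_relevance`) from generation problems (low `groundedness` or `correctness` despite relevant documents).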