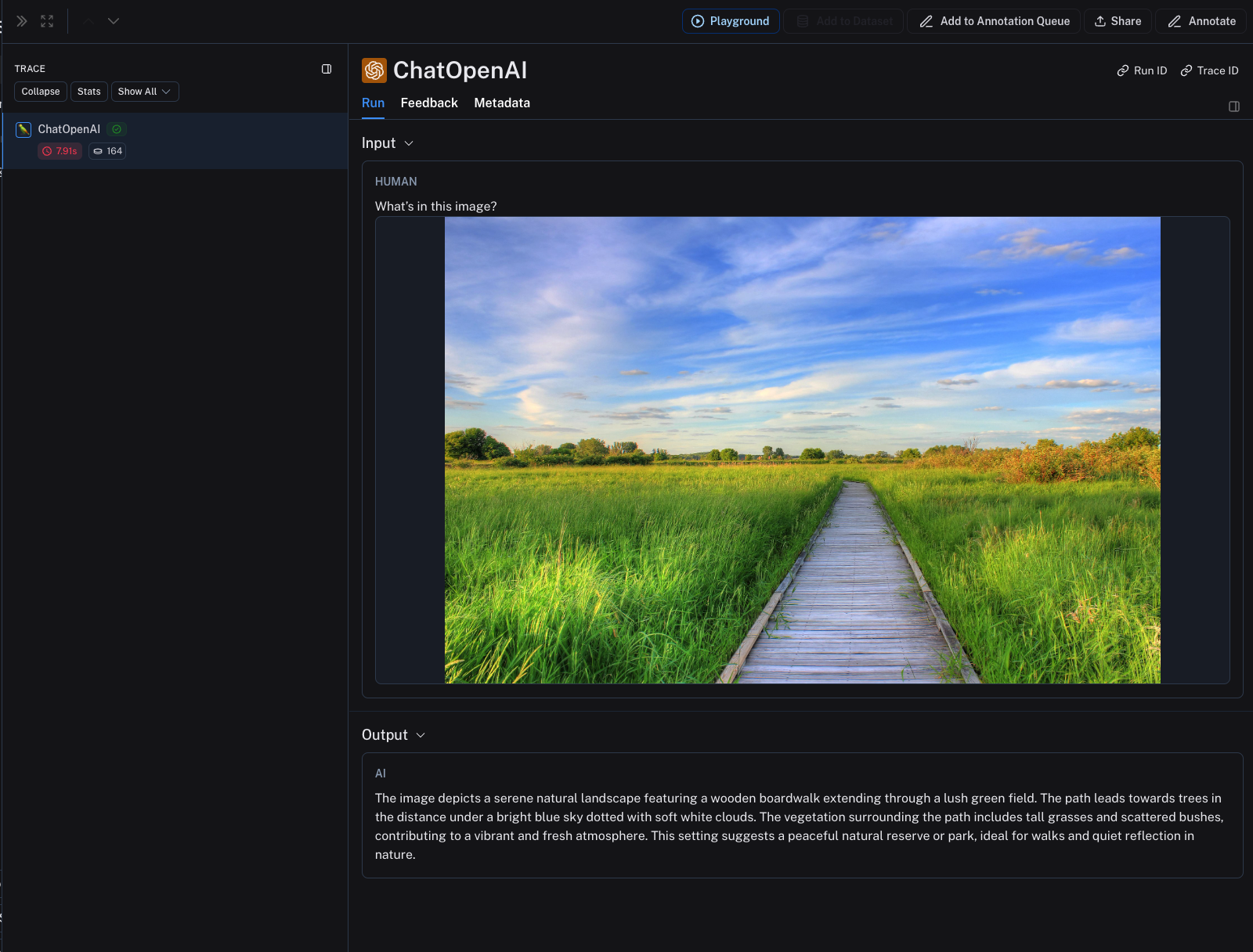

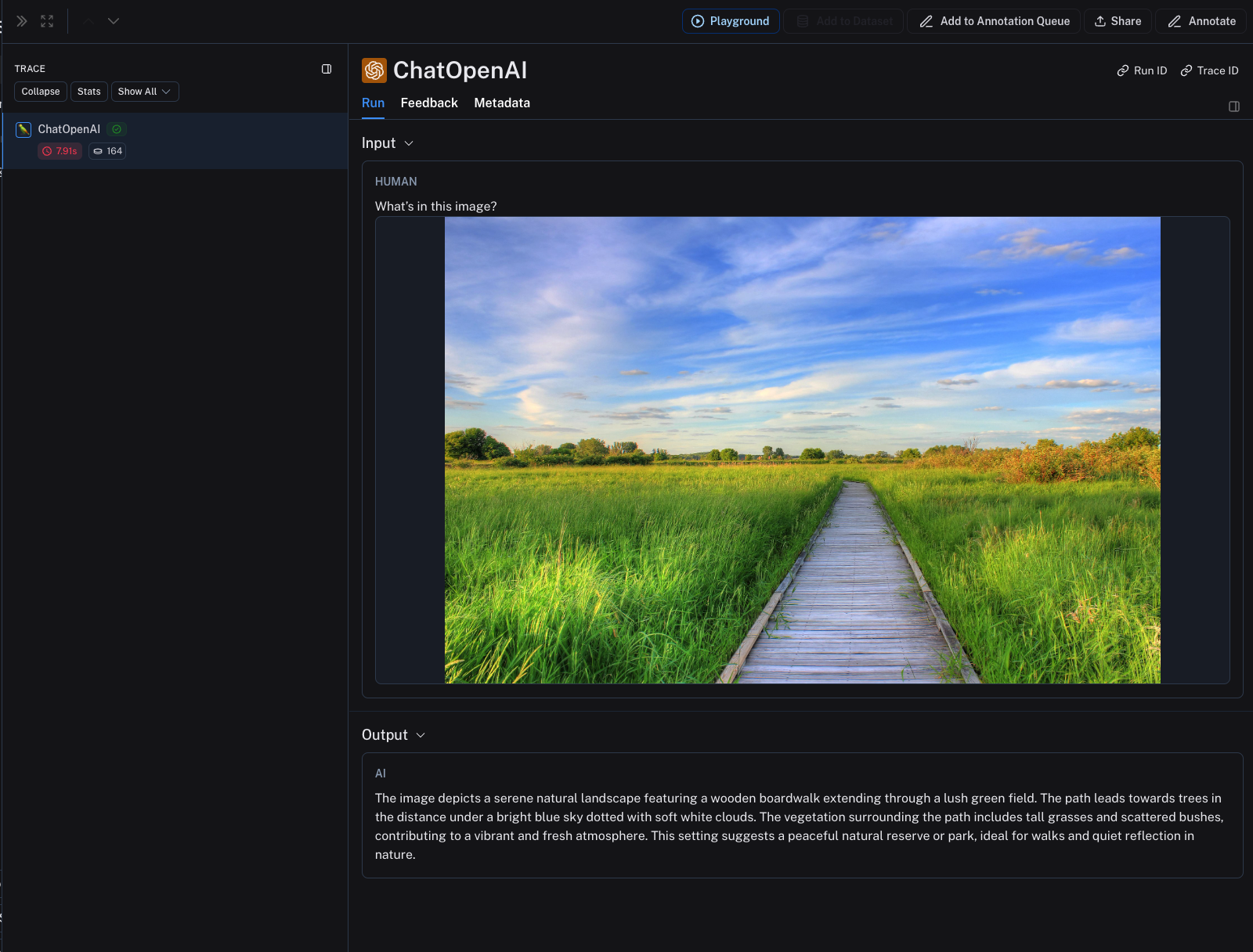

wrap_openai/ wrapOpenAI in Python or TypeScript respectively and pass an image URL or base64 encoded image as part of the input.

- Python

- TypeScript

wrap_openai/ wrapOpenAI in Python or TypeScript respectively and pass an image URL or base64 encoded image as part of the input.