Model Configurations

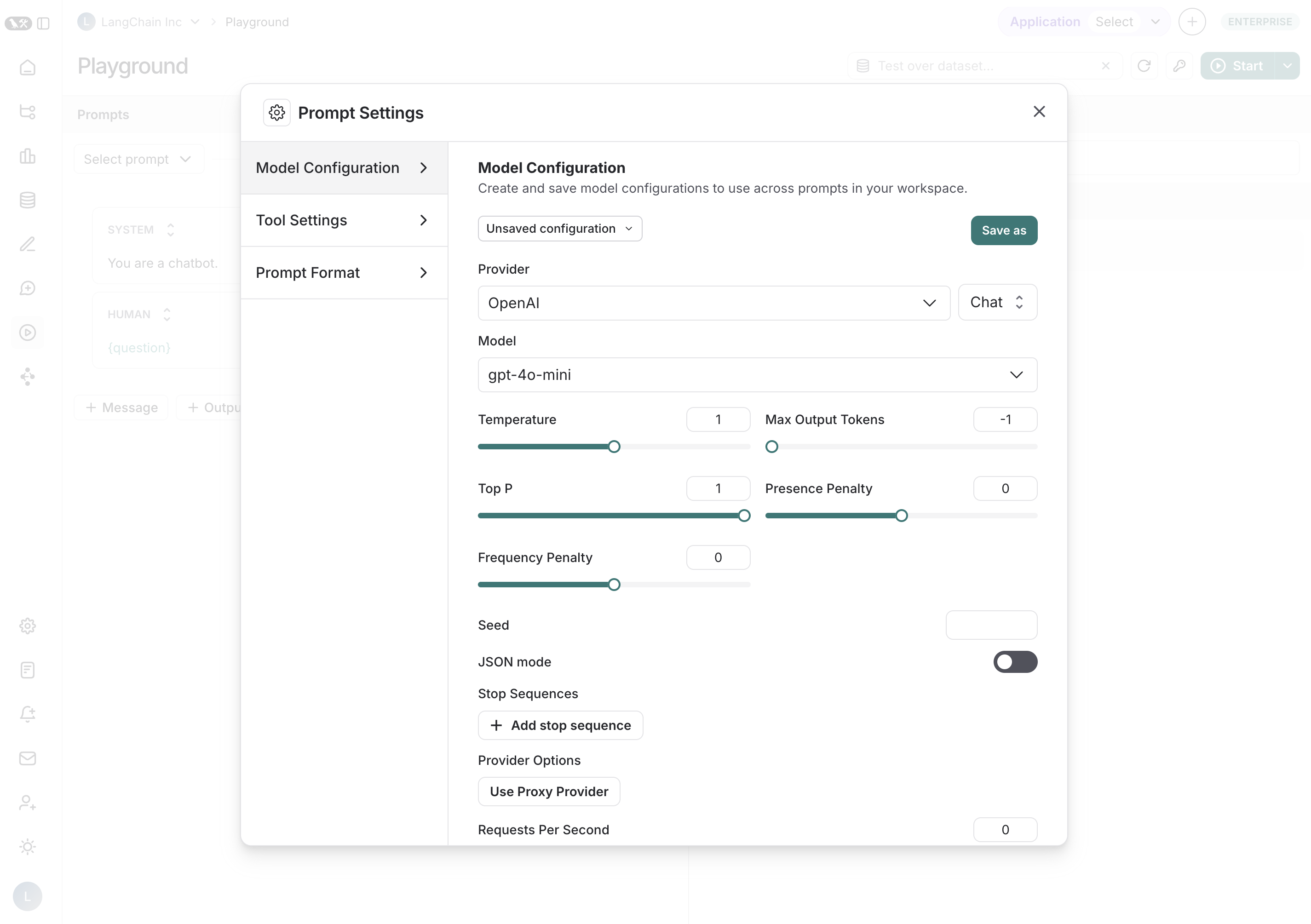

Model configurations are the set of parameters against which your prompt is run. For example, they include the provider, model, and temperature, among others. The LangSmith playground allows you to save and manage your model configurations, making it easy to reuse preferred settings across multiple prompts and sessions.

Creating Saved Configurations

- Adjust the model configuration as desired

- Click the Save As button in the top bar

- Enter a name and optional description for your configuration and confirm.

Model configuration dropdown.

Default Configurations

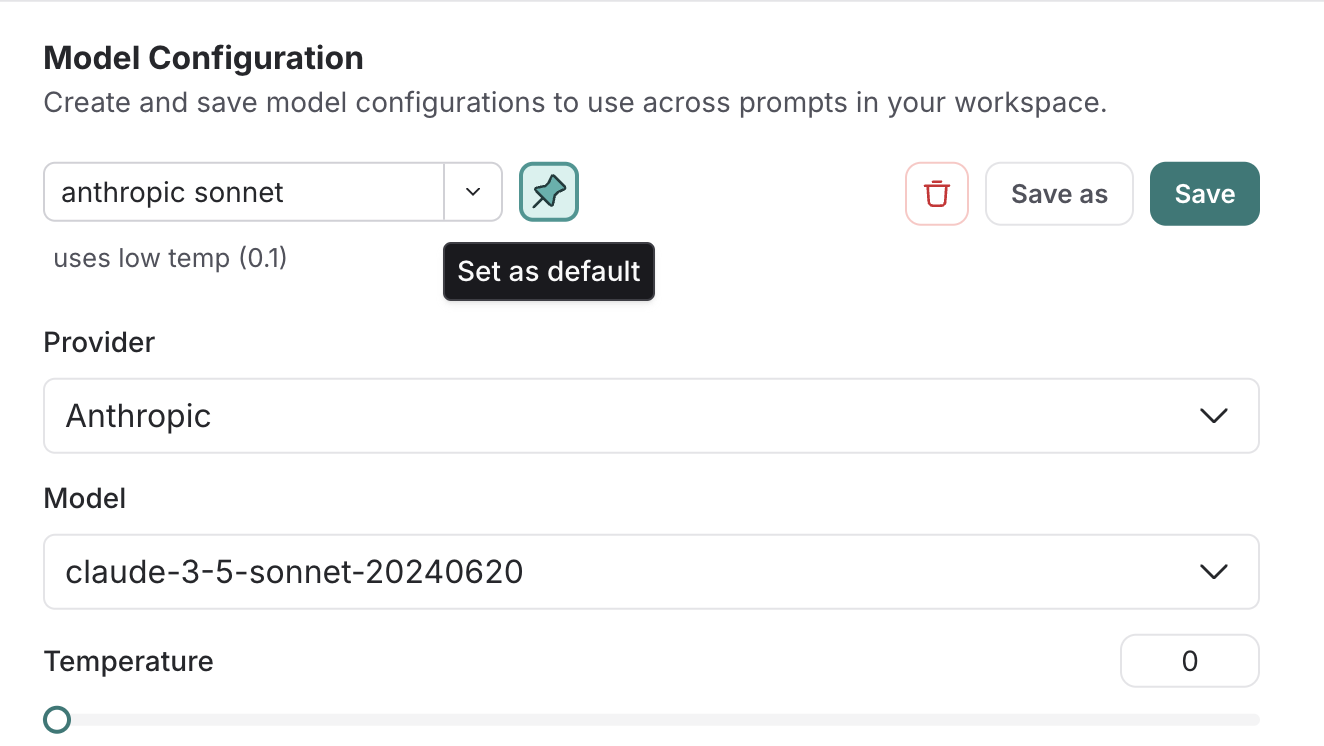

Once you have created a saved configuration, you can optionally set it as your default, so any new prompt you create will automatically use this configuration. To set a configuration as your default, click the Set as default button next to the dropdown.

Editing Configurations

- To rename or update the description: Click the configuration name or description and make your changes.

- To update the current configuration’s parameters: Make any desired changes to the parameters and click the Save button at the top.

Deleting Configurations

- Select the configuration you want to remove

- Click the trash can icon to delete it

Tool Settings

Tools enable your LLM to perform tasks like searching the web, looking up information, and more. Here you can manage the ways your LLM can utilize and access the tools you have defined in your prompt. Learn more about tools here.

Prompt Formatting

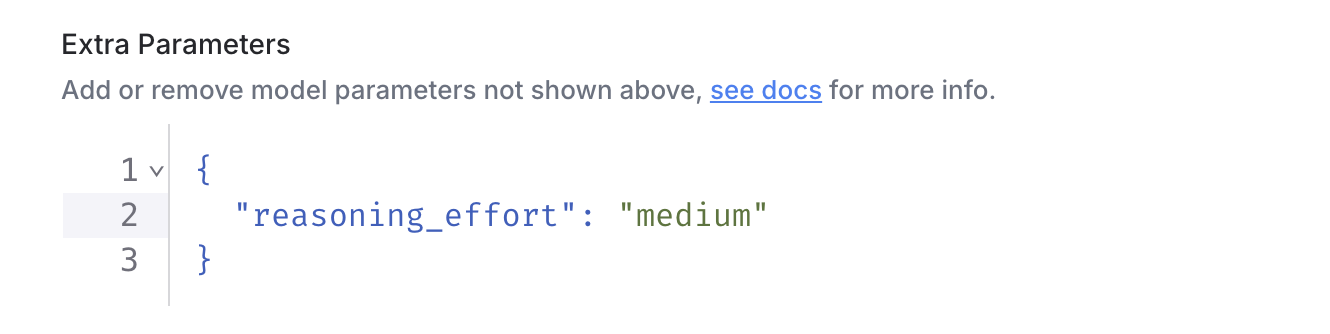

For information on chat and completion prompts, see here. For information about prompt templating and using variables, see here.

Extra Parameters

The Extra Parameters field allows you to pass additional model parameters that aren’t directly supported in the LangSmith interface. This is particularly useful in two scenarios:

- When model providers release new parameters that haven’t yet been integrated into the LangSmith interface. You can specify these parameters in JSON format to use them right away.

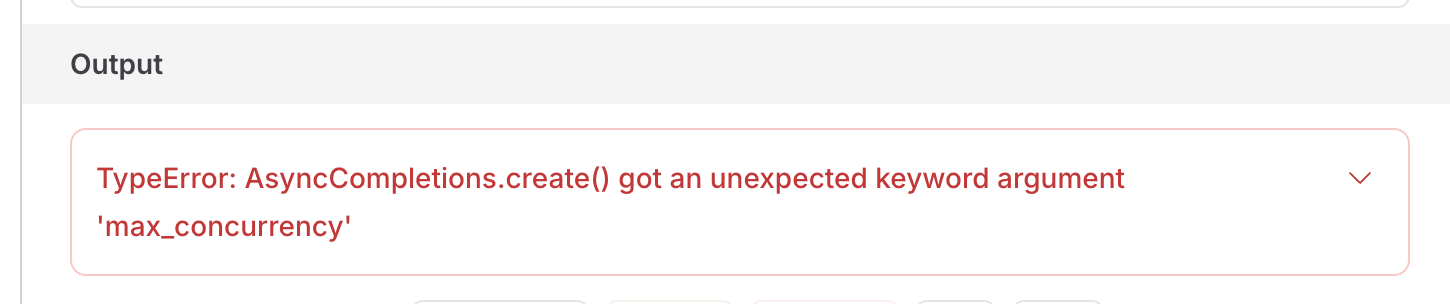

- When troubleshooting parameter-related errors in the playground. If you receive an error about unnecessary parameters (more common when using LangChainJS for run tracing), you can use this field to remove the extra parameters.
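
Because the Extra Parameters field takes JSON, it can help to check your input before pasting it in. The sketch below is only an illustration: it assumes the field expects a single JSON object of name/value pairs, and the parameter names `top_k` and `seed` are hypothetical placeholders, not parameters confirmed for any particular provider. The helper `parse_extra_parameters` is likewise invented for this example and is not part of LangSmith.

```python
import json

# Hypothetical extra parameters; names and values are placeholders only.
extra_params_text = '{"top_k": 40, "seed": 1234}'


def parse_extra_parameters(text: str) -> dict:
    """Parse the text and confirm it is a JSON object (assumed requirement)."""
    parsed = json.loads(text)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(parsed, dict):
        raise ValueError("Extra parameters must be a JSON object of name/value pairs")
    return parsed


params = parse_extra_parameters(extra_params_text)

# Pretty-print the validated object so it can be pasted into the field.
print(json.dumps(params, indent=2))
```

If the text is malformed JSON or is not an object (for example, a bare list), the helper raises an error locally, which is usually easier to diagnose than a parameter error returned from a playground run.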