- Built-in tools: Pre-configured tools provided by model providers (like OpenAI and Anthropic) that are ready to use. These include capabilities like web search, code interpretation, and more.

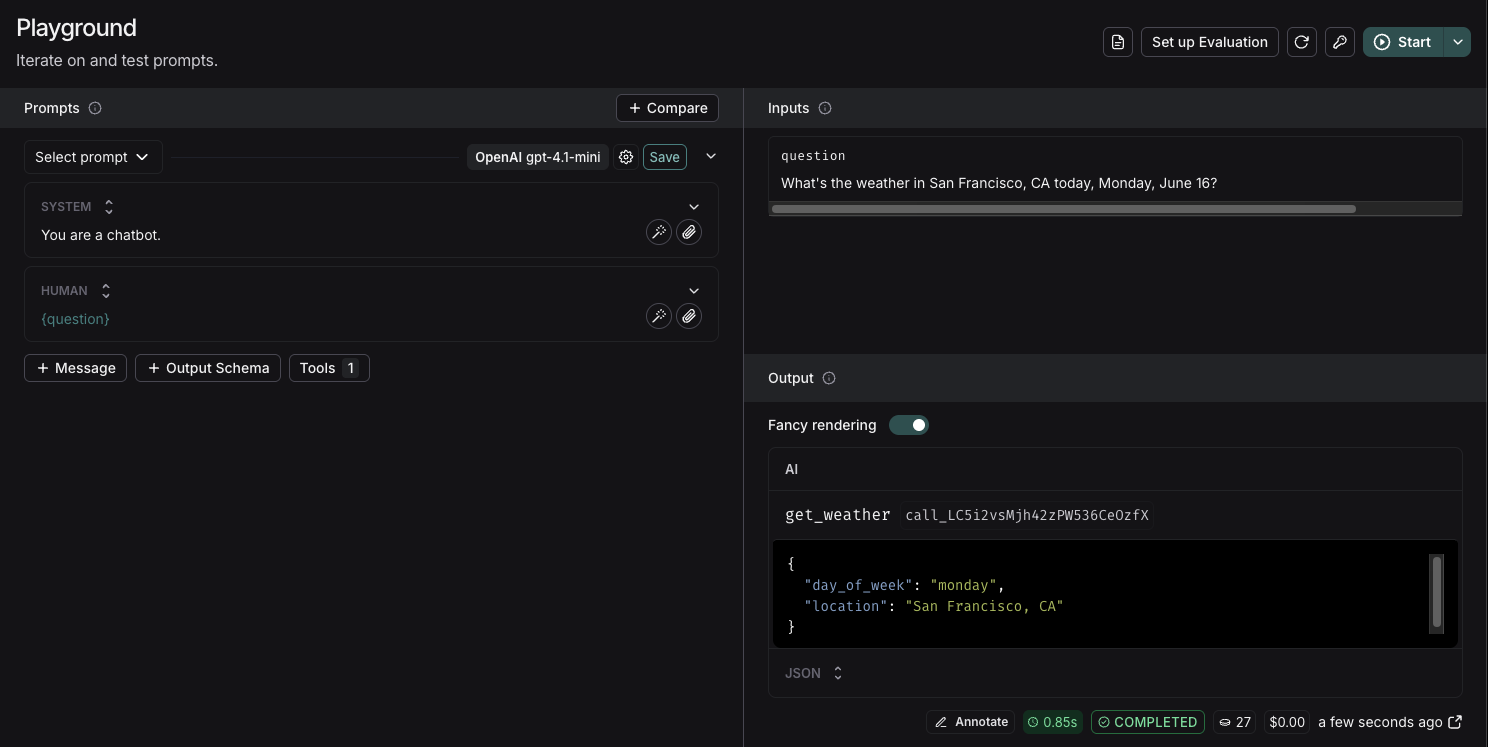

- Custom tools: Functions you define to perform specific tasks. These are useful when you need to integrate with your own systems or create specialized functionality. When you define custom tools within the LangSmith Playground, you can verify that the model correctly identifies and calls these tools with the correct arguments. Soon we plan to support executing these custom tool calls directly.

When to use tools

- Use built-in tools when you need common capabilities like web search or code interpretation. These are built and maintained by the model providers.

- Use custom tools when you want to test and validate your own tool designs, including:

  - Validating which tools the model chooses to use and seeing the specific arguments it provides in tool calls

  - Simulating tool interactions

Built-in tools

The LangSmith Playground has native support for a variety of tools from OpenAI and Anthropic. If you want to use a tool that isn’t explicitly listed in the Playground, you can still add it by manually specifying its type and any required arguments.
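As a rough illustration of specifying a tool's type and required arguments by hand, the sketch below writes a remote MCP tool definition as a plain Python dict. The field names follow OpenAI's published shape for its MCP tool as we understand it; the server label and URL are hypothetical placeholders, and the exact format the Playground expects is an assumption to check against the provider's documentation.

```python
import json

# Assumed shape: an OpenAI-style remote MCP tool definition.
mcp_tool = {
    "type": "mcp",
    "server_label": "example_server",         # hypothetical label
    "server_url": "https://example.com/mcp",  # hypothetical URL
    "require_approval": "never",
}

def missing_fields(tool, required):
    """Return the required keys that are absent from a tool definition."""
    return [key for key in required if key not in tool]

# Serialize to JSON so it can be pasted wherever a tool definition is accepted.
print(json.dumps(mcp_tool, indent=2))
print(missing_fields(mcp_tool, ["type", "server_label", "server_url"]))
```

A small check like `missing_fields` is only a convenience for catching typos before pasting; the provider remains the authority on which arguments a given tool type requires.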

OpenAI Tools

- Web search: Search the web for real-time information

- Image generation: Generate images based on a text prompt

- MCP: Gives the model access to tools hosted on a remote MCP server

- View all OpenAI tools

Anthropic Tools

Adding and using tools

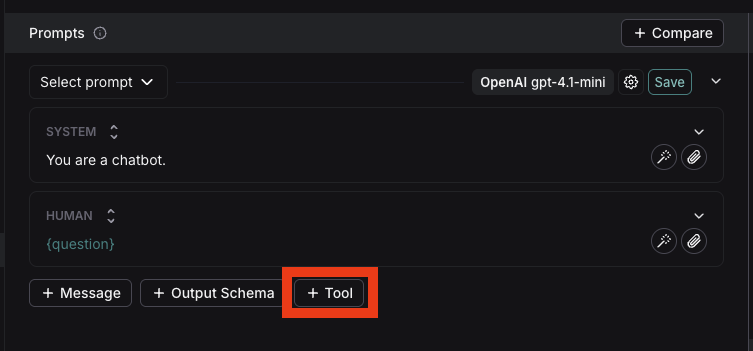

Add a tool

To add a tool to your prompt, click the + Tool button at the bottom of the prompt editor.

Use a built-in tool

- In the tool section, select the built-in tool you want to use. You’ll only see the tools that are compatible with the provider and model you’ve chosen.

- When the model calls the tool, the Playground will display the response.

Create a custom tool

To create a custom tool, you’ll need to provide:

- Name: A descriptive name for your tool

- Description: Clear explanation of what the tool does

- Arguments: The inputs your tool requires
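The three fields above map naturally onto a function-style tool definition. The following is a minimal sketch, assuming the arguments are described with JSON Schema as in common function-calling formats; the `get_weather` tool and its fields are hypothetical and exist only for illustration.

```python
import json

# Hypothetical custom tool: a name, a description, and arguments as JSON Schema.
get_weather_tool = {
    "name": "get_weather",
    "description": "Return the current weather for a given city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        # Only "city" is mandatory; "unit" is optional.
        "required": ["city"],
    },
}

print(json.dumps(get_weather_tool, indent=2))
```

Marking only the truly necessary arguments as required makes it easier to see whether the model fills in optional ones sensibly.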

Note: When running a custom tool in the Playground, the model will respond with a JSON object containing the tool name and the tool call arguments. Currently, there’s no way to connect this to a hosted tool via MCP.
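Because the model only returns the tool call rather than executing it, one way to validate the result is to copy the JSON out and check it by hand or with a short script. The sketch below assumes a tool call shaped as an object with `name` and `arguments` keys; that shape, the `get_weather` tool, and the `check_tool_call` helper are all hypothetical, and the actual output format may differ by provider.

```python
import json

# Hypothetical tool call, shaped like the JSON a model might return.
tool_call_json = '{"name": "get_weather", "arguments": {"city": "Paris"}}'

def check_tool_call(raw, expected_name, required_args):
    """Parse a tool call and list any problems with its name or arguments."""
    call = json.loads(raw)
    problems = []
    if call.get("name") != expected_name:
        problems.append(f"unexpected tool: {call.get('name')!r}")
    args = call.get("arguments", {})
    # Some providers send arguments as a JSON-encoded string; decode if so.
    if isinstance(args, str):
        args = json.loads(args)
    for arg in required_args:
        if arg not in args:
            problems.append(f"missing argument: {arg!r}")
    return problems

print(check_tool_call(tool_call_json, "get_weather", ["city"]))  # prints []
```

An empty list means the model picked the expected tool and supplied every required argument.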

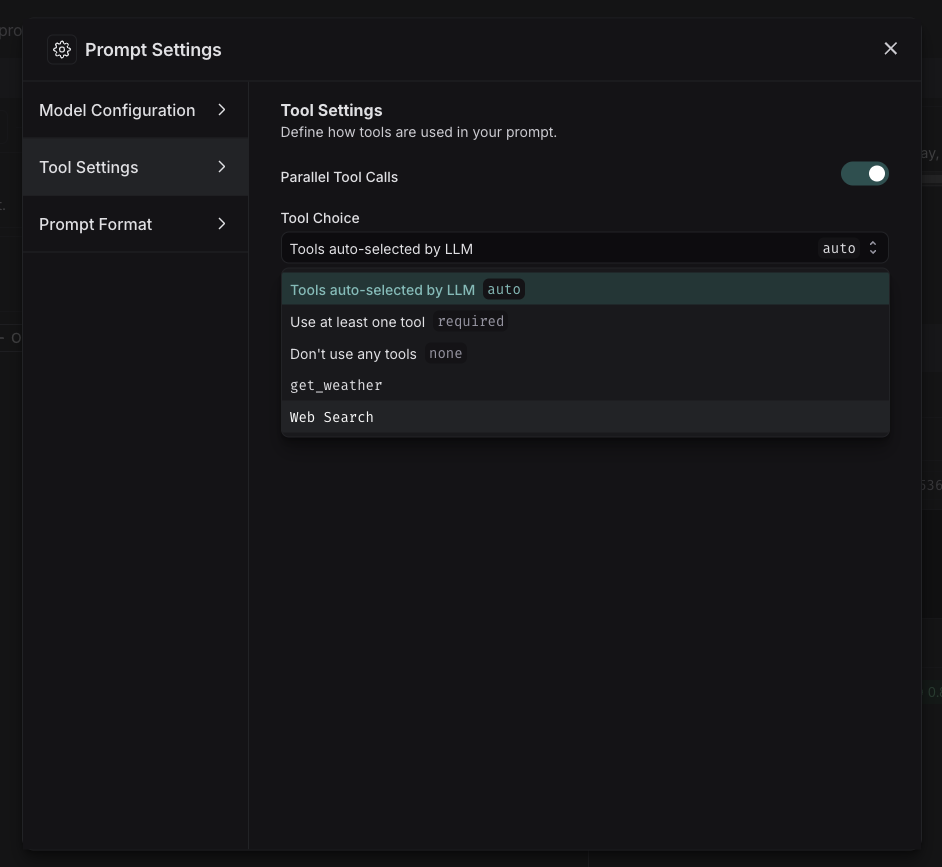

Tool choice settings

Some models provide control over which tools are called. To configure this:

- Go to prompt settings

- Navigate to tool settings

- Select tool choice
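For context on what a tool choice setting usually controls, the sketch below maps three common intents (let the model decide, force some tool call, force one specific tool) onto the `tool_choice` values used by OpenAI's chat API and Anthropic's Messages API as we understand them. The `build_tool_choice` helper and its mode names are hypothetical, and how the Playground labels these options is an assumption.

```python
# Assumed mapping from an intent to provider-style tool_choice values.
VALID_MODES = {"auto", "required", "specific"}

def build_tool_choice(provider, mode, tool_name=None):
    """Return a tool_choice value for the given provider and mode."""
    if mode not in VALID_MODES:
        raise ValueError(f"unknown mode: {mode}")
    if mode == "specific" and not tool_name:
        raise ValueError("tool_name is required when mode is 'specific'")
    if provider == "openai":
        if mode == "specific":
            return {"type": "function", "function": {"name": tool_name}}
        return mode  # "auto" or "required" are passed as plain strings
    if provider == "anthropic":
        if mode == "specific":
            return {"type": "tool", "name": tool_name}
        # Anthropic uses "any" where OpenAI uses "required".
        return {"type": "any" if mode == "required" else "auto"}
    raise ValueError(f"unknown provider: {provider}")

print(build_tool_choice("openai", "required"))
print(build_tool_choice("anthropic", "specific", "get_weather"))
```

Forcing a specific tool is handy when testing a single tool definition, while the automatic mode is closer to how the model behaves when it must decide for itself.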